Recently I’ve been working on loading a set of data (51 gig) into a rather simple db for use with generating some data for later on down the application pipeline. Speed was very important as we only have a certain time window to process the information. To store the data we originally looked at an Oracle solution (since we have a good bit of it already in-house) but decided against it because of cost. The decision was then made to go with SQL Server.

Let me tangent here and state that in many corporations the choice of things such as DB are outside the scope of the development team because the product has to be easily supported by the corporate infrastructure. This does make a lot of sense in that you want people who already know what they are doing to be able to support the system. Hiring more people or retraining is sometimes just not an option. We wanted to go free-ware (MySql, PostgreSql, etc) but we had no one to support it. Ok, back to the story. So we write the code, optimize the heck out of the code (caching with EhCache, pre-loading things, staging tables, etc) and the performance is decent. Our bottleneck at this point becomes the db itself. We are only getting about 1000 rows a second inserted. The reads are decent but mainly because we optimize the reads so it occurs infrequently. Well, what other options do we have? And how should we test?The Test

For my test I did the following:

- All tests will be local to avoid network latency

- Writes will be tested by loading 36,000 rows of data in 1000 row batch chunks

- Reads will be tested for reading one record and for reading all records

- Tests were conducted on an Intel i5 2.5GHz Windows 7 PC with 4GB ram

SQL Server

Not much to say here really. A standard RDBMS that’s more about one beefy server than scalability and fault tolerance across distributed machines.

The Install

Easy. Download the free developer version and then just run the install program. There were some minor issues with the port configuration but some quick Googling took care of it.

The API

JDBC (via SQL or JPA). Both very well supported and easy to use. I used straight SQL (in a Hibernate Session.doWork()) for the test

Basic code:

Session session = ((Session)entityManagerFactory.createEntityManager().getDelegate());

session.doWork(new Work() {

@Override

public void execute(Connection conn) throws SQLException {

PreparedStatement stmt = null;

try {

stmt = conn.prepareStatement(SQL_INSERT);

for (Data l : items) {

stmt.setString(1, l.getA());

stmt.setString(2, l.getB());

stmt.setString(3, l.getC());

stmt.setString(4, l.getD());

stmt.setInt(5, l.getE());

stmt.setLong(6, l.getF());

stmt.addBatch();

}

stmt.executeBatch();

}

finally {

try {stmt.close();} catch (Exception e) {}

}

}

});

The Times (in seconds)

| Writes (36,000) | Reads (1) | Reads (36,000) |

|---|---|---|

|

|

|

Notes:

SQL Server has something behind the scenes going on that if you don’t hit the DB for 20 minutes or so the first read is significantly slower than the following reads (as is evident above in the first read of a single row). I was able to replicate this behavior multiple times so it wasn’t a fluke.

Cassandra

Used by many big shops (NetFlix,Twitter,Digg, etc). Scalable, fault tolerant, and perhaps the leader in NoSQL based db’s.

The Install

Simple. Extract it, follow the basic instructions online, you’re good to go. I did have to modify a config file as well as do some research to tweak the performance.

The API

Cassandra does not come with a standard API for Java as it’s more about the open source DB side of things than just a all-in-one solution that gives you a specific libraries to use.

I found two libraries that appeared to be popular: Hector and Pelops, so I tried them both 🙂Pelops

Pelops was fairly straightforward to use. Nothing too complex.

Basic code:

Cluster cluster = new Cluster("localhost", 9160);

Pelops.addPool(POOL, cluster, KEY_SPACE);

Mutator mutator = Pelops.createMutator(POOL);

for (Data l : items) {

mutator.writeColumns(COLUMN_FAMILY, l.getA(),

mutator.newColumnList(

mutator.newColumn(B, l.getB()),

mutator.newColumn(C, l.getC()),

mutator.newColumn(D, l.getD()),

mutator.newColumn(E, l.getE()),

mutator.newColumn(F, l.getF())

));

}

mutator.execute(ConsistencyLevel.ANY);

The Times (in seconds) – Pelops

| Writes (36,000) | Reads (1) | Reads (36,000) |

|---|---|---|

|

|

All over 40 seconds. Not worth mentioning |

Hector

Hector had the same basic idea as Pelops. I likes this API a bit more because if followed a more true-to-form Builder methodology.

Basic code:

Cluster cluster = HFactory.getOrCreateCluster(POOL,"localhost:9160");

Keyspace ksp = HFactory.createKeyspace(KEY_SPACE, cluster);

ksp.setConsistencyLevelPolicy(new ConsistencyLevelPolicy() {

@Override

public HConsistencyLevel get(OperationType op) {

return HConsistencyLevel.ONE;

}

@Override

public HConsistencyLevel get(OperationType op, String cfName) {

return HConsistencyLevel.ONE;

}

});

ColumnFamilyTemplate<String, String> template template = new ThriftColumnFamilyTemplate<String, String>(

ksp, COLUMN_FAMILY, StringSerializer.get(), StringSerializer.get());

Mutator<String> mutator = template.createMutator();

String chanId = String.valueOf(channelId);

for (Data l : items) {

String key = l.getA();

mutator.addInsertion(key, COLUMN_FAMILY, HFactory.createStringColumn(B, l.getB()))

.addInsertion(key, COLUMN_FAMILY, HFactory.createStringColumn(C, l.getC()))

.addInsertion(key, COLUMN_FAMILY, HFactory.createStringColumn(D, l.getD()))

.addInsertion(key, COLUMN_FAMILY, HFactory.createStringColumn(E, l.getE()))

.addInsertion(key, COLUMN_FAMILY, HFactory.createStringColumn(F, l.getF()));

}

mutator.execute();

The Times (in seconds) – Hector

| Writes (36,000) | Reads (1) | Reads (36,000) |

|---|---|---|

|

|

|

Notes:

Pelops performed so poorly against Hector that I tried my best to figure out what it was doing differently. Honestly, I couldn’t find any real difference. Pelops generated way more activity on the server than Hector but I couldn’t tell you why.

Mongo DB

Mongo DB is a Document based DB. Think of it (and I’m really simplifying it) as a store where the value is a JSON object behind the scenes. It’s main advantage of Cassandra from what I could find is that is supports N number of indexes while Cassandra only supports a max of 2 (the Column and SuperColumn I believe).

The Install

Simple. Download, install.

The API

I have to say that out of all the API’s I think I like Mongo’s the best. It’s very straightforward and very easy to follow.

Basic code:

Mongo m = new Mongo();

DB db = m.getDB(DB_NAME);

DBCollection dbColl = db.getCollection(TABLE_NAME);

List<DBObject> dbos = new ArrayList<DBObject>(1000);

for (Datal : items) {

BasicDBObject doc = new BasicDBObject();

doc.put(A, l.getA());

doc.put(B, l.getB());

doc.put(C, l.getC());

doc.put(D, l.getD());

doc.put(E, l.getE());

doc.put(F, l.getF());

dbos.add(doc);

}

dbColl.insert(dbos);

The Times (in seconds)

| Writes (36,000) | Reads (1) | Reads (36,000) |

|---|---|---|

|

|

|

Notes:

Wow does it write fast. It was so fast that before I even did my read tests I went into the console to make sure that the data was actually in there.

Redis

Redis is a true key/value pair store. They did just introduce hash’s for storing things a little more conveniently but really you could think of it as a disk backed cache.

The Install

Another easy one to install. Download, install. Nice and easy.

The API

Like Cassandra, Redis has a couple different API’s to choose from. The one I found that seem to have the most support and was the most mature was Jedis.

I have to say that the API methods and the Redis method names themselves are not very straightforward. The names are things like hget, mget, get and while the get is straightforward you would be guessing at what hget and mget are without having to look at the API’s. Maybe it’s just me but I like to be able to tell what something is doing by just looking at it when possible.

I should note now that I couldn’t find a really good way to easily get all the records for the all test. I wanted to treat it as something that would not just have one type of data in it and the only way to do that is via the way you name the keys (since it is a true key/value only system). This meant that the only way I could retrieve all the data for my data set was to look up all the keys that matched my key pattern and then get the individual data per key.

Basic code:

JedisPool pool = new JedisPool(new JedisPoolConfig(), "localhost");

Jedis jedis = pool.getResource();

BinaryTransaction transaction = jedis.multi();

String key = null;

for (Data l : items) {

key = "data:"+l.getA();

// A, B, etc are actually static final strings with .getBytes() called in this case

transaction.hset(key.getBytes(), A, l.getA().getBytes());

transaction.hset(key.getBytes(), B, l.getB().getBytes());

transaction.hset(key.getBytes(), C, l.getC().getBytes());

transaction.hset(key.getBytes(), D, l.getD ().getBytes());

transaction.hset(key.getBytes(), E,String.valueOf(l.getE()).getBytes());

transaction.hset(key.getBytes(), F, String.valueOf(l.getF()).getBytes());

}

transaction.exec();

The Times (in seconds)

| Writes (36,000) | Reads (1) | Reads (36,000) |

|---|---|---|

|

|

|

Notes:

As stated above I had to come up with a funky way to do the all test. This may have impacted the performance as you can see the single read was amazingly fast but the all was rather slow. I did my research and that was the only way I could see it being done. Hopefully in a future version (or if someone tells me another way) they will address this.

Couch DB

A Document oriented DB like Mongo.

The Install

This was nightmarish. First, the distro is basically just the source and you have to build it yourself. I’m on a Windows box and don’t have a compiler so I had to search for a distro I could use. I found two. The first one didn’t even work. The second worked but geeze, because CouchDB is written in Erlang, it installs a lot (so does SQL Server to be fair). Once I got it up and running it gives you a rather nice Web interface to work with it though.

The API

I gave up. Seriously. I spent a few hours just trying to get my demo code to even connect to the server. The documentation was light about setup and the examples I found online were not very helpful. The code was a bit confusing and overly complex as well. Can you tell it just rubbed me the wrong way?

Notes

I wanted to give this a shot as I had heard a lot of good things about it but it was so painful that I just stopped. I also didn’t like the idea of having to make views and register them with the DB so we can use them in code. This is supposed to be radically different than an RDBMS but I’m not so sure.

The Results

I’d like to say there was a clear winner…… but there wasn’t.

This is what the tests showed:(Pelops is not listed, it was just too far out there)

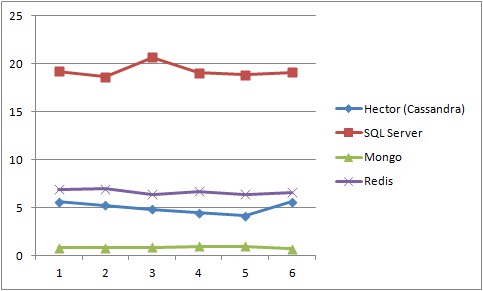

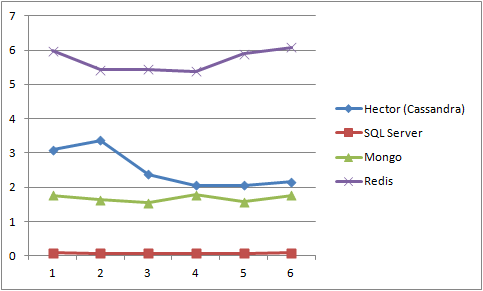

(Y axis = Seconds, X axis = Execution #):

Writes (36,000)

Reads (1)

Note: Notice that first read with SQL Server….

Reads (All)

From the above we can see that:- Mongo has the fastest writes

- Redis had the fastest read for one record

- SQL Server had the fastest read for all records (after the initial first read)

What one would I go with? Mongo. It was great for writing, decent for reads, and very easy to use. The API was very straighforward, the install was simple, the cmd line tools easy to use and understand.

It really comes down to what you are going to use the system for. If the data needs to be very well organized and strict, stick with a RDBMS like SQL Server. If you have some more freedom with your data then I think it comes down to determining if you are going to be reading or writing more. If reading and you are going to be getting specific things (such as data based on a user id) then Redis may be the best solution. If you are going to be grabbing more robust data and it will vary between single or multiple records, Mongo or Cassandra would work (I would favor Mongo).

2 Responses to DB Technology Review (with pretty charts too!)